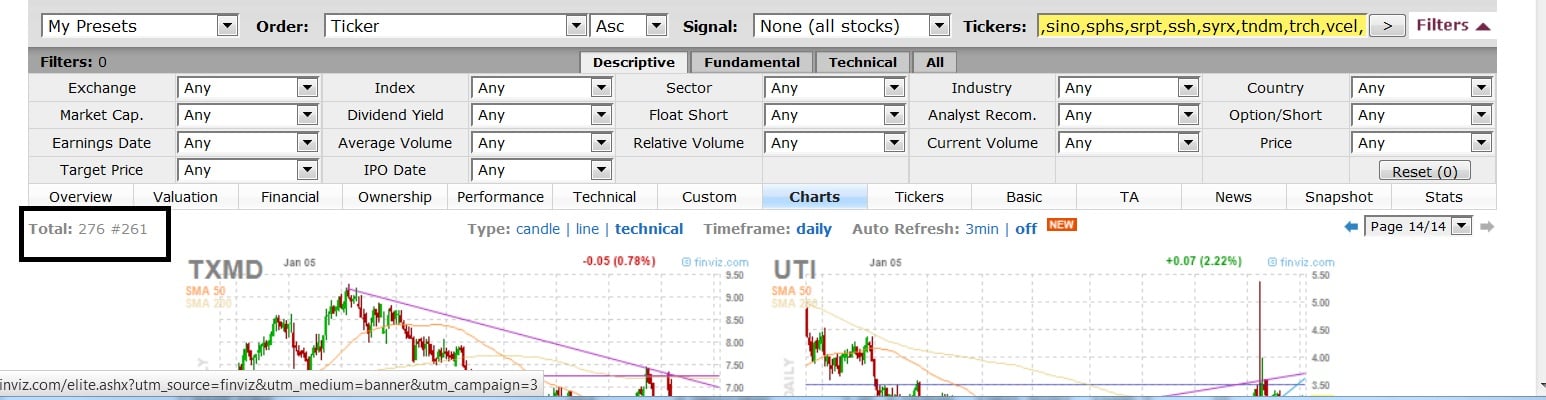

I’ve started to recover the OABOT and recreate the consolidation score. It turns out that a Finviz Elite subscription does give immediate updates on stock scores, as long as you are logged in at the time in Internet Explorer. (Firefox doesn’t work for some reason; it may be a settings problem.) I’m not as mad at them as I was for shutting off the export function without warning. It is a bit of a hassle to log in first, redo things, and pay for the subscription, but so far no one else that I’m aware of offers nearly as much data that I can compile, sort through, and export to Excel with my formulas preset to adjust the way I want. Originally http://investexcel.net/ had a spreadsheet that would pull all the data from Finviz with the push of a button. I altered it by creating a formula that pulls from the tab containing the data, and then I set up my own formulas on top of that. I wouldn’t really know how to build something that does this automatically with another data source, free or paid.

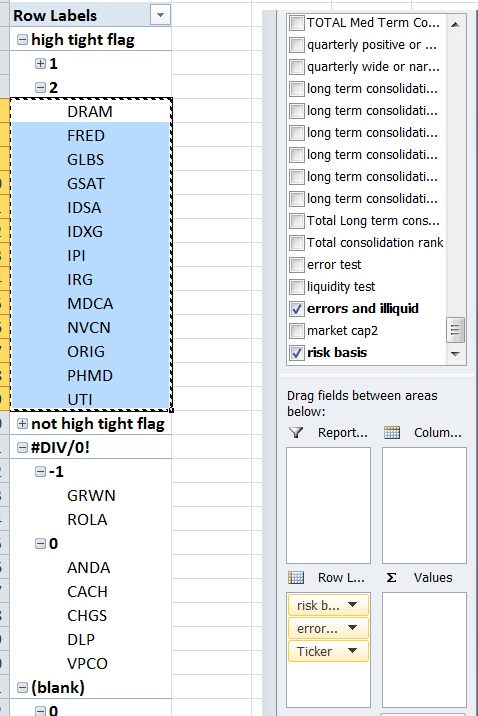

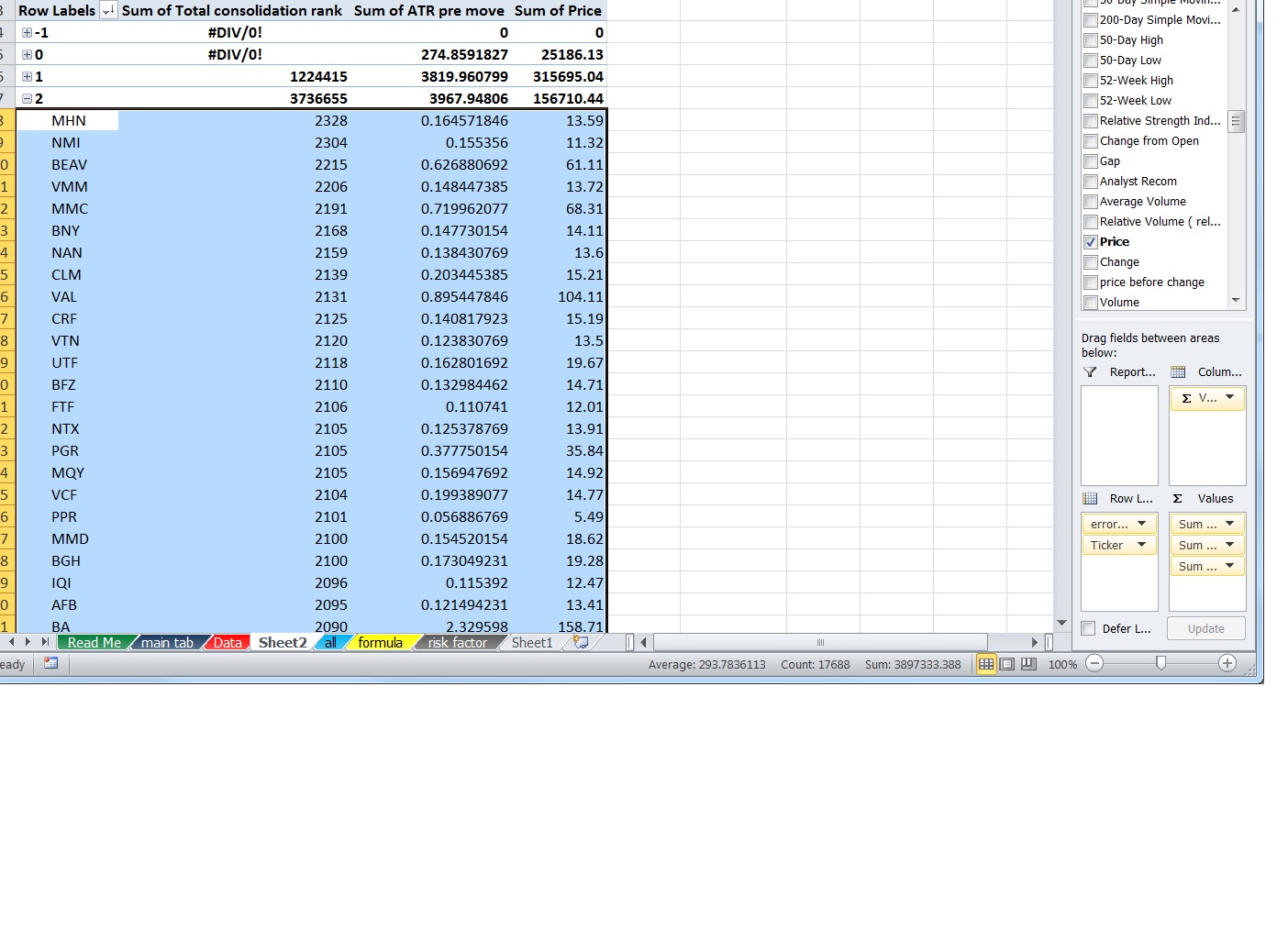

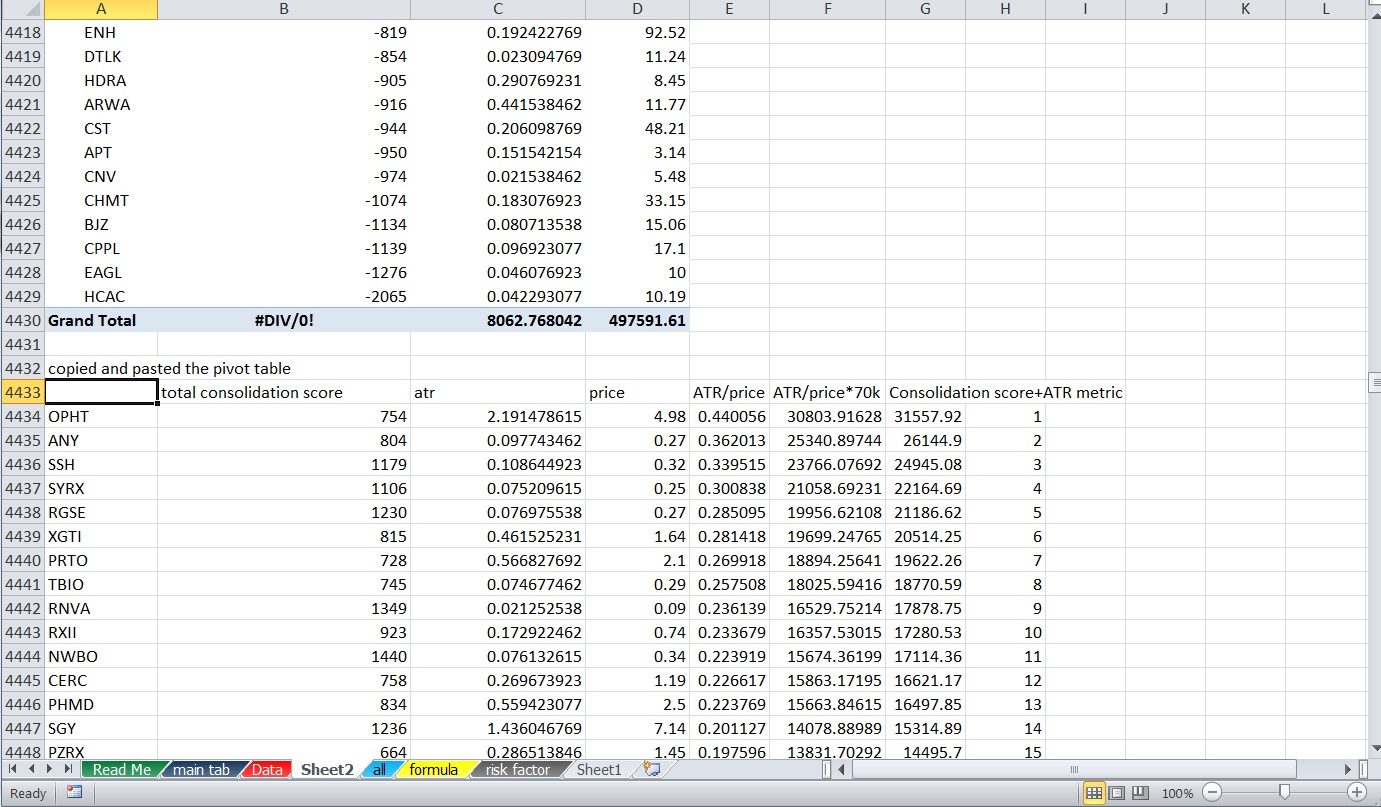

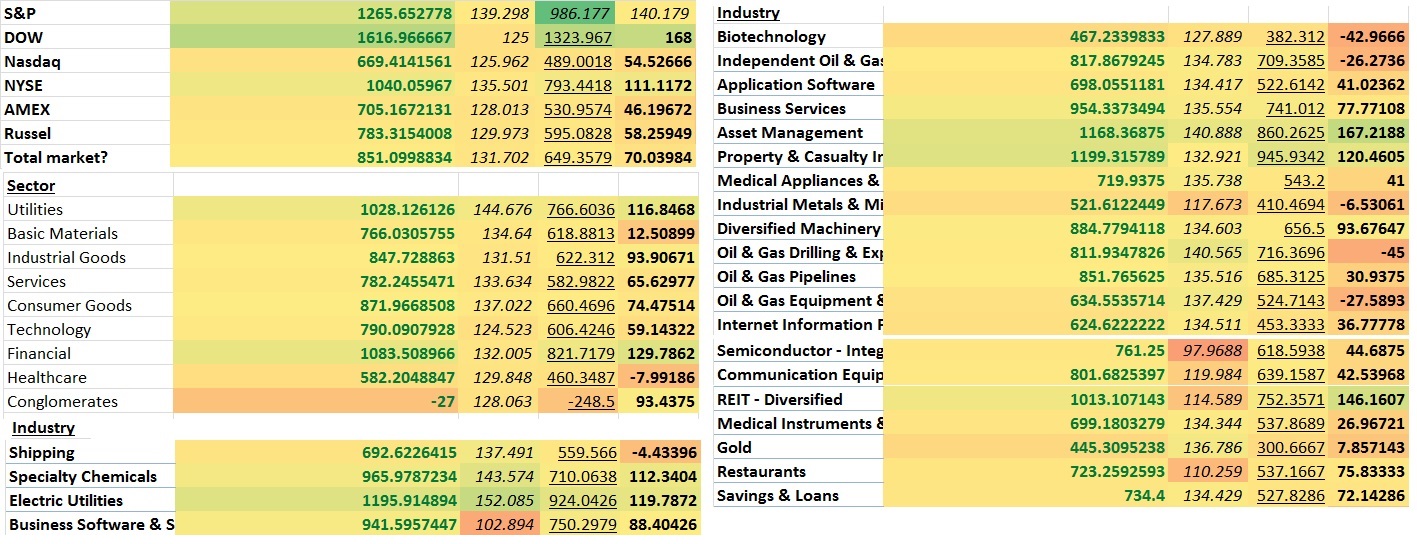

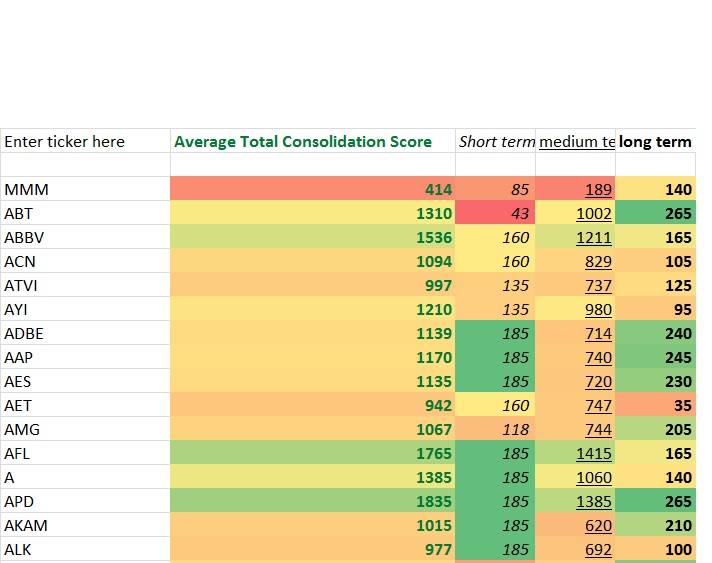

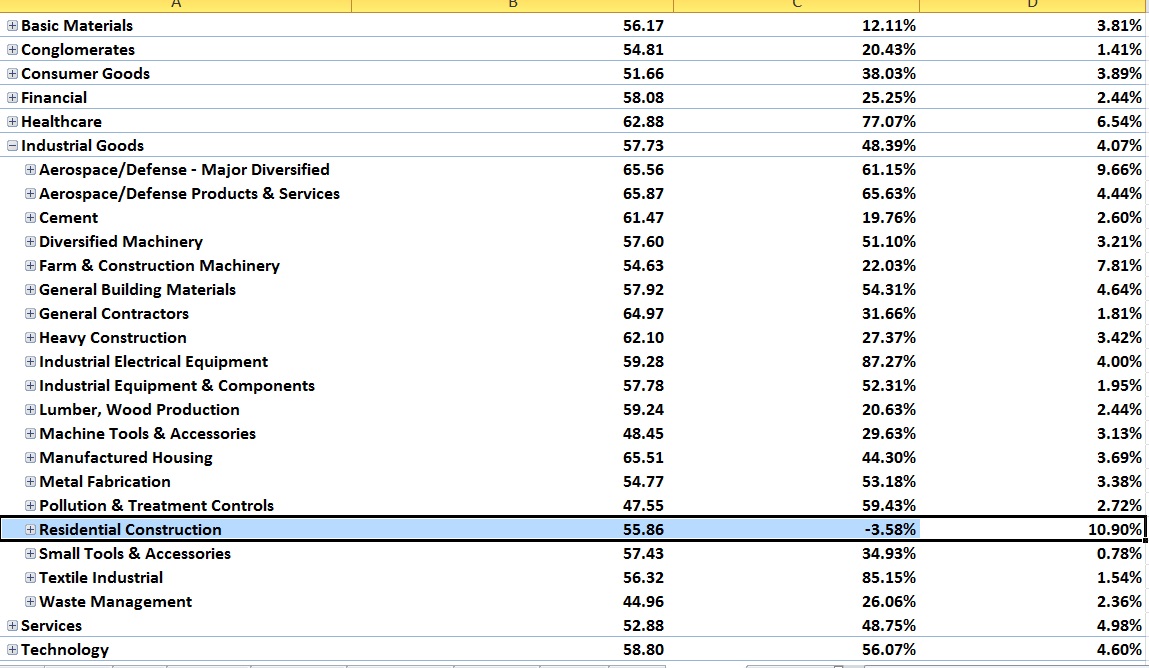

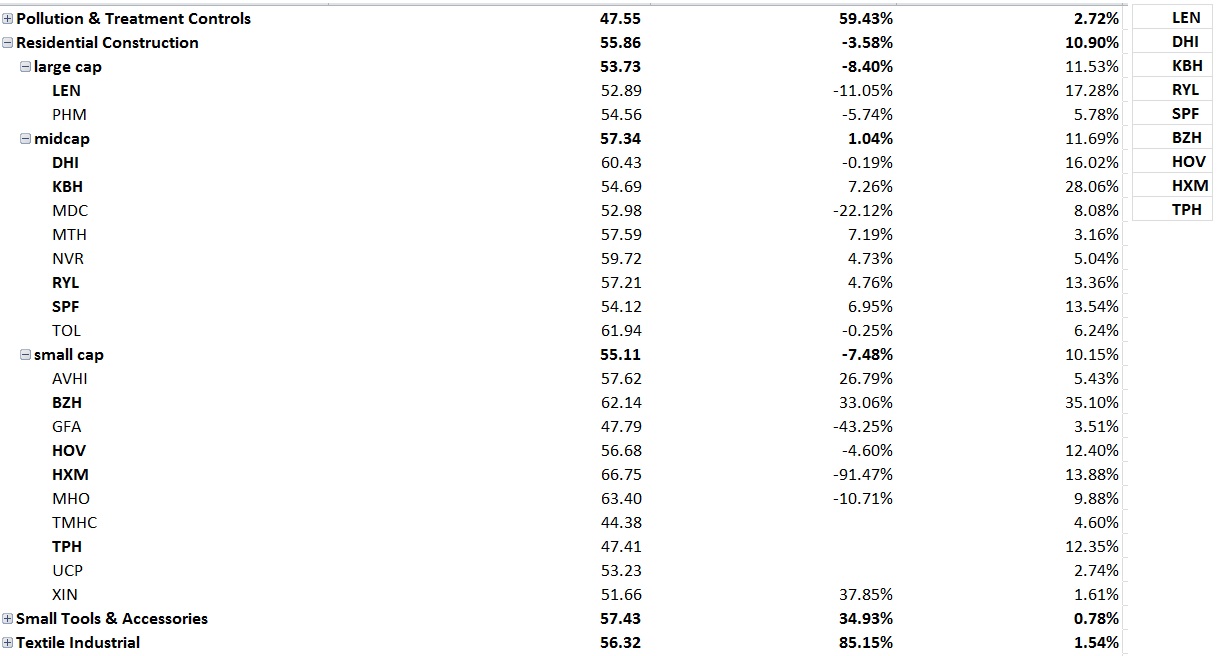

There’s a list of 400 stocks that I pulled based on the top “Intermediate consolidation score” as applied in my OABOT spreadsheet. From those I manually scanned and pulled all the ones I like: 39 names. I probably could have pulled more if I had wanted to grab the ones that all have a similar “bull flag” pattern like WABC, but I decided not to.

For some reason I’m having trouble posting the link, so for now you’ll have to copy and paste it.

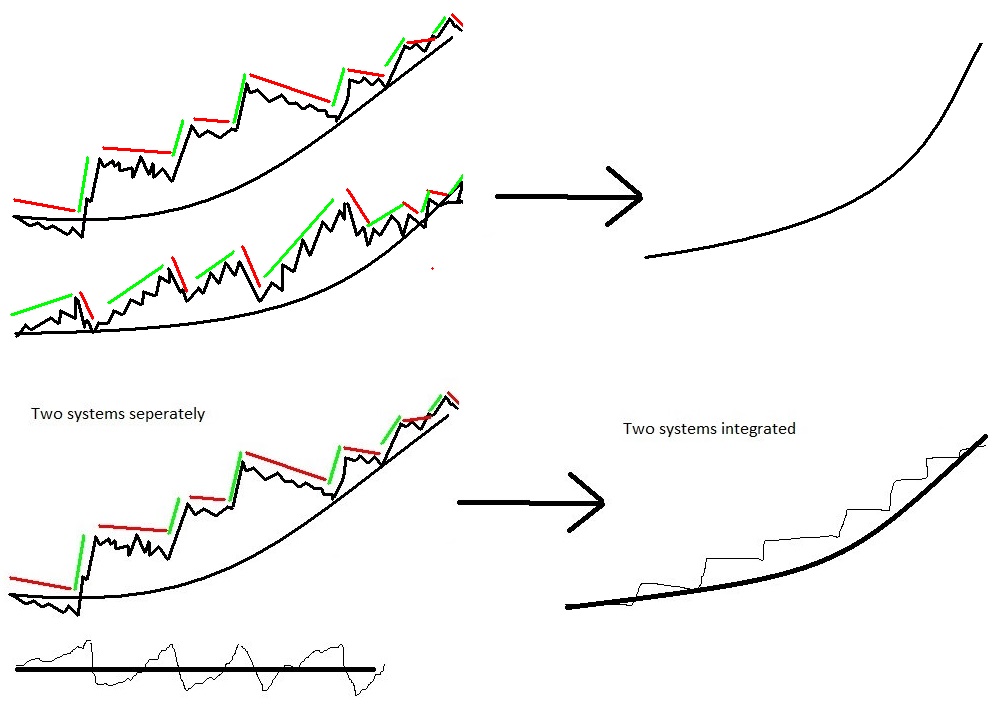

Next I will go through a process that attempts to improve either the quality or the quantity of the results I pull from the 400-stock list.

The process will look something like this:

1) First, run the scan as it currently exists.

2) Change something about the scan’s formula.

3) Compare the results, and repeat until the results improve.

Basically, I want to start with the extremes on each end and move toward the middle until I can’t improve it any further, or the improvement is no longer noticeable or worth the effort.
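The “start at the extremes and move toward the middle” idea resembles a ternary search, which narrows a range from both ends when the quality of the results rises to a single peak and then falls off. A minimal sketch, assuming a hypothetical `quality(weight)` function stands in for my own judgement of a scan’s results (in practice I judge those by eye, not by code) and that quality has one peak inside the range:

```python
from typing import Callable


def ternary_narrow(
    quality: Callable[[float], float],
    lo: float,
    hi: float,
    tol: float = 10.0,
    min_diff: float = 0.0,
    max_rounds: int = 50,
) -> float:
    """Narrow [lo, hi] from both ends toward the weight with the best quality.

    Assumes quality() has a single peak on [lo, hi]. Stops when the range is
    narrower than `tol`, or when the two probes differ by less than `min_diff`
    (the improvement is "no longer noticeable").
    """
    for _ in range(max_rounds):
        if hi - lo <= tol:
            break
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        q1, q2 = quality(m1), quality(m2)
        if abs(q1 - q2) < min_diff:
            break  # difference too small to be worth chasing
        if q1 < q2:
            lo = m1  # with one peak, it cannot lie left of m1
        else:
            hi = m2  # with one peak, it cannot lie right of m2
    return (lo + hi) / 2


if __name__ == "__main__":
    # Hypothetical quality curve that peaks at a reward of 150 points.
    print(ternary_narrow(lambda w: -(w - 150) ** 2, 0, 400, tol=1))
```

Each round needs two evaluations, so with manual judging a loose `tol` or a nonzero `min_diff` keeps the number of test runs small.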

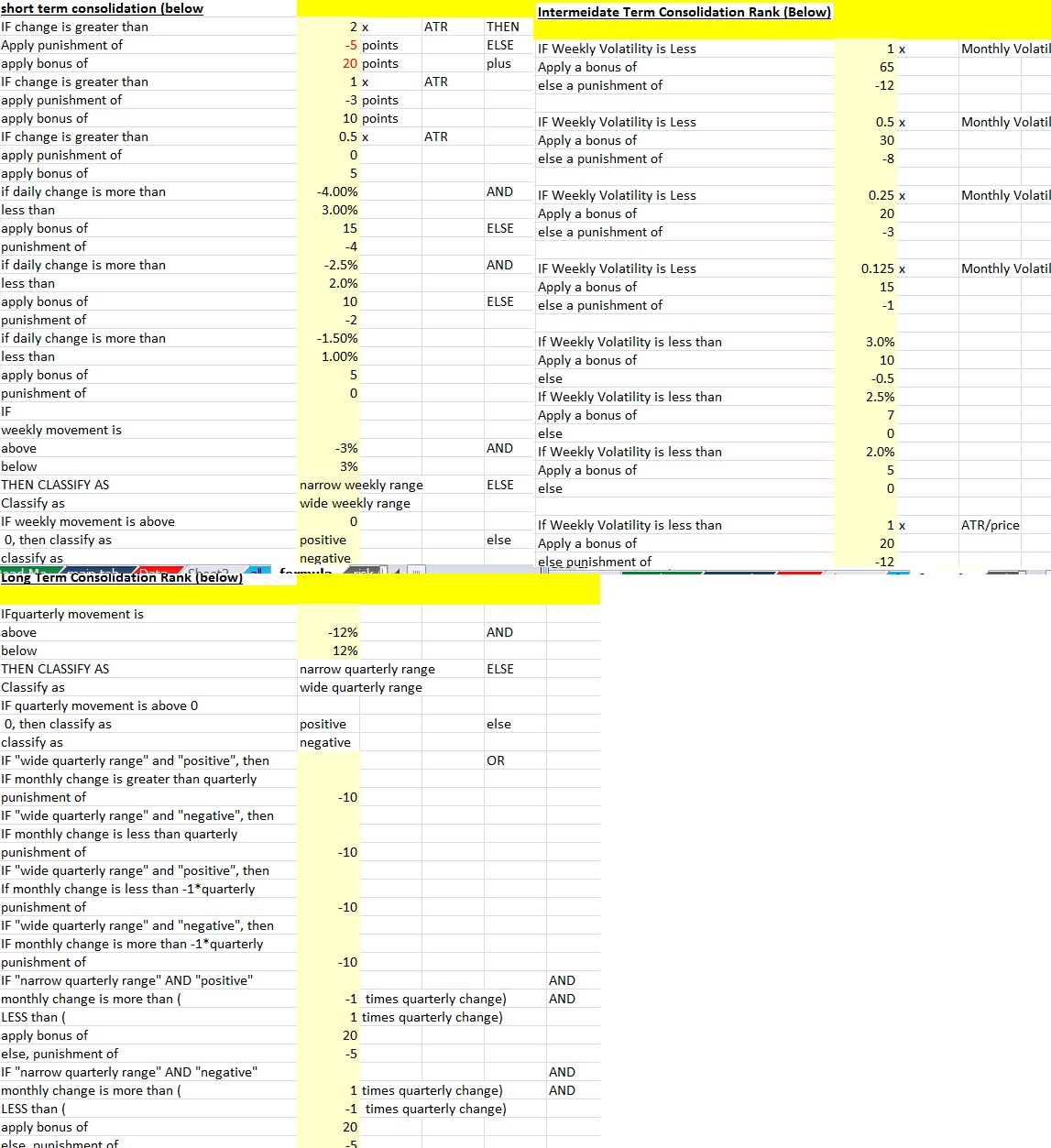

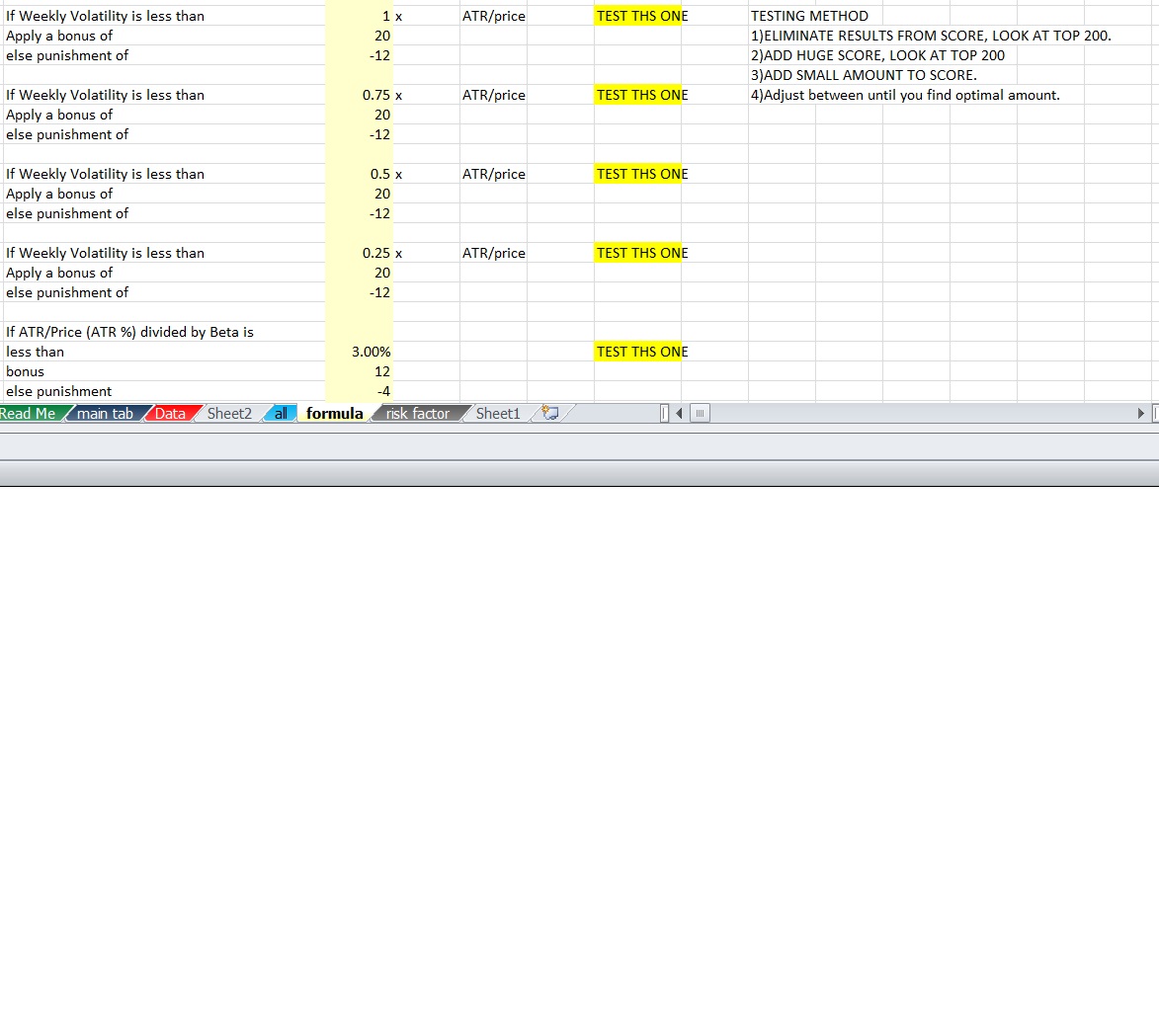

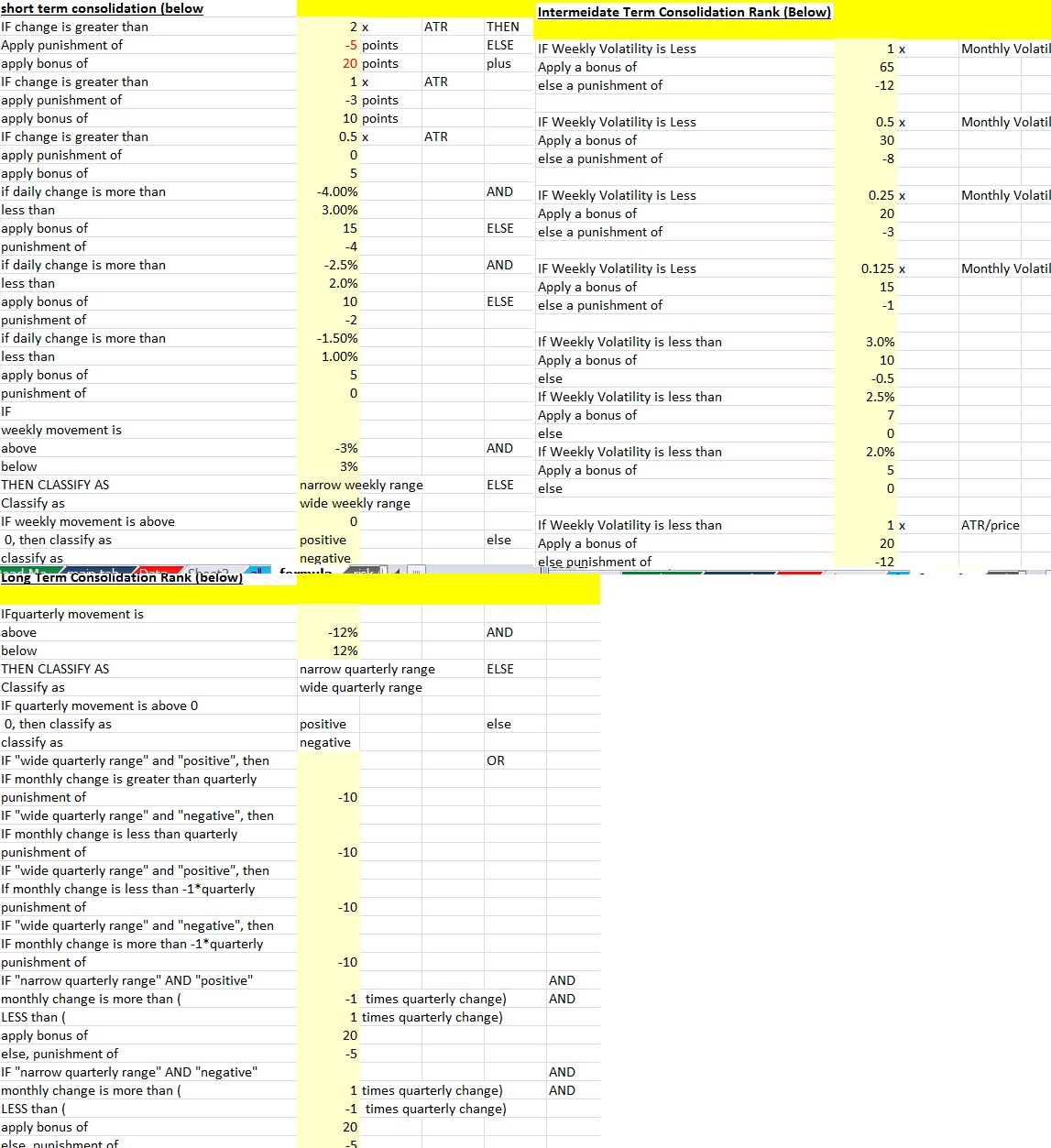

Fortunately, the new spreadsheet is better designed for quick adjustments. I created a formula tab that lets me change many of the numbers in the formulas without scanning through computer gibberish to do so.

All I have to do is change a number and all the formulas and results change to reflect these changes.

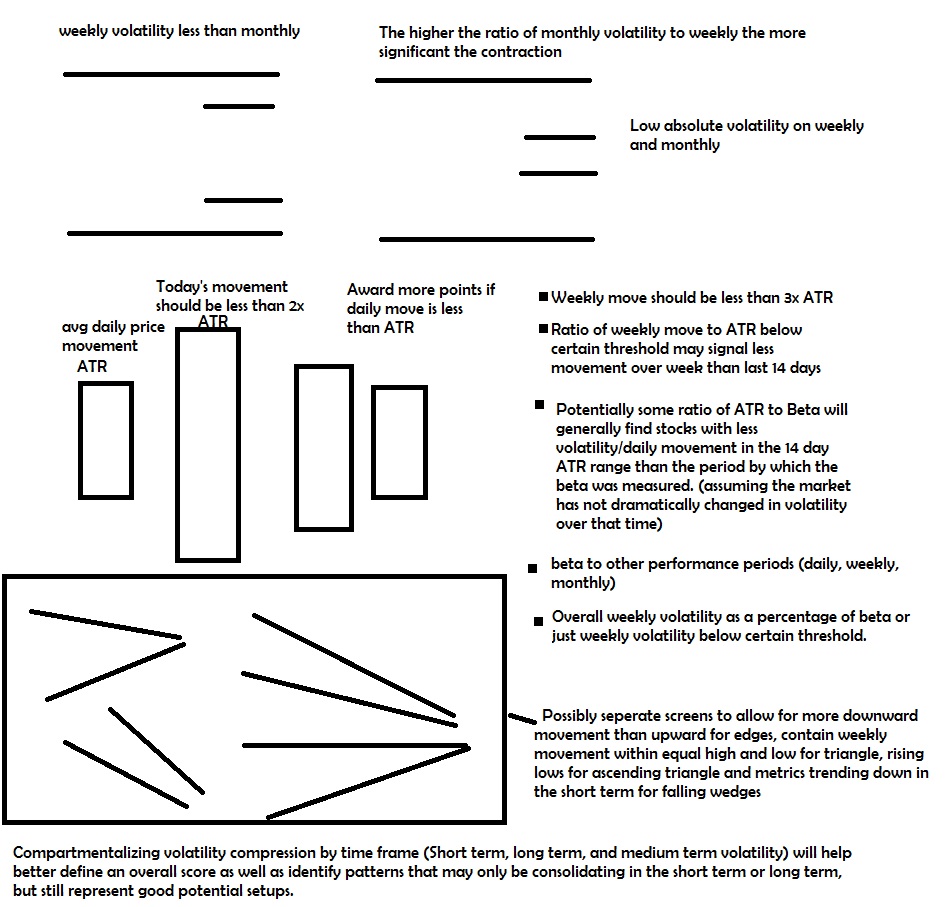

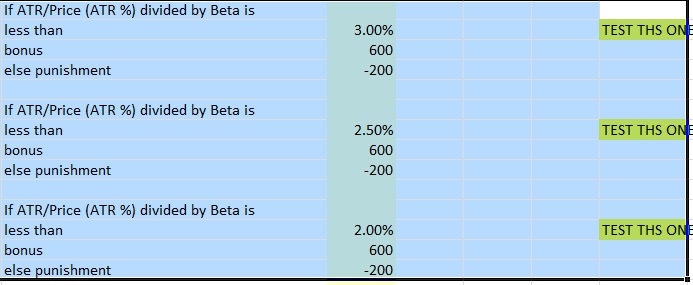

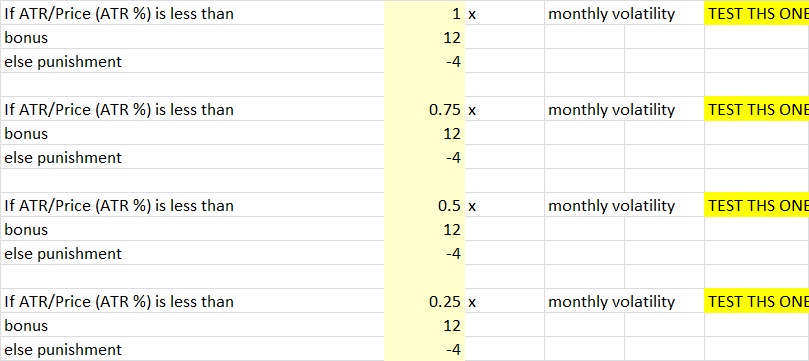

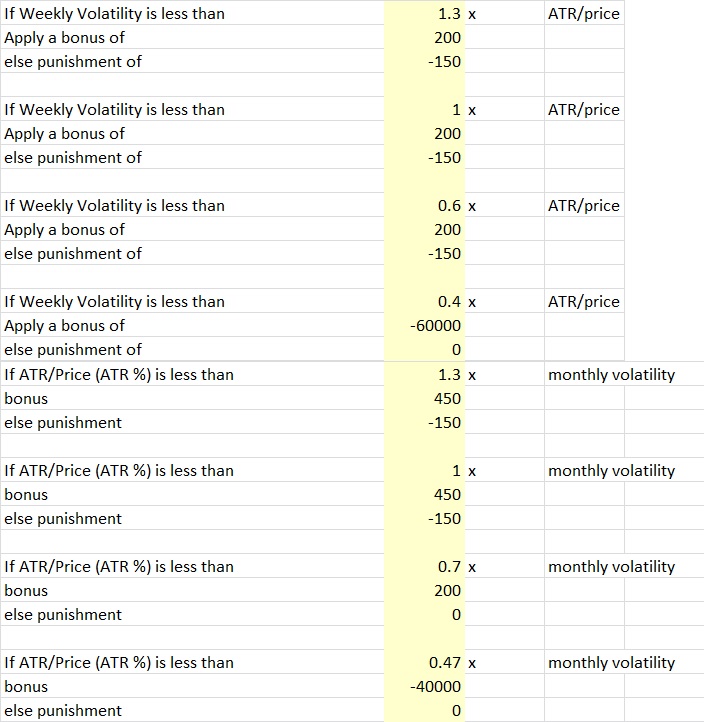

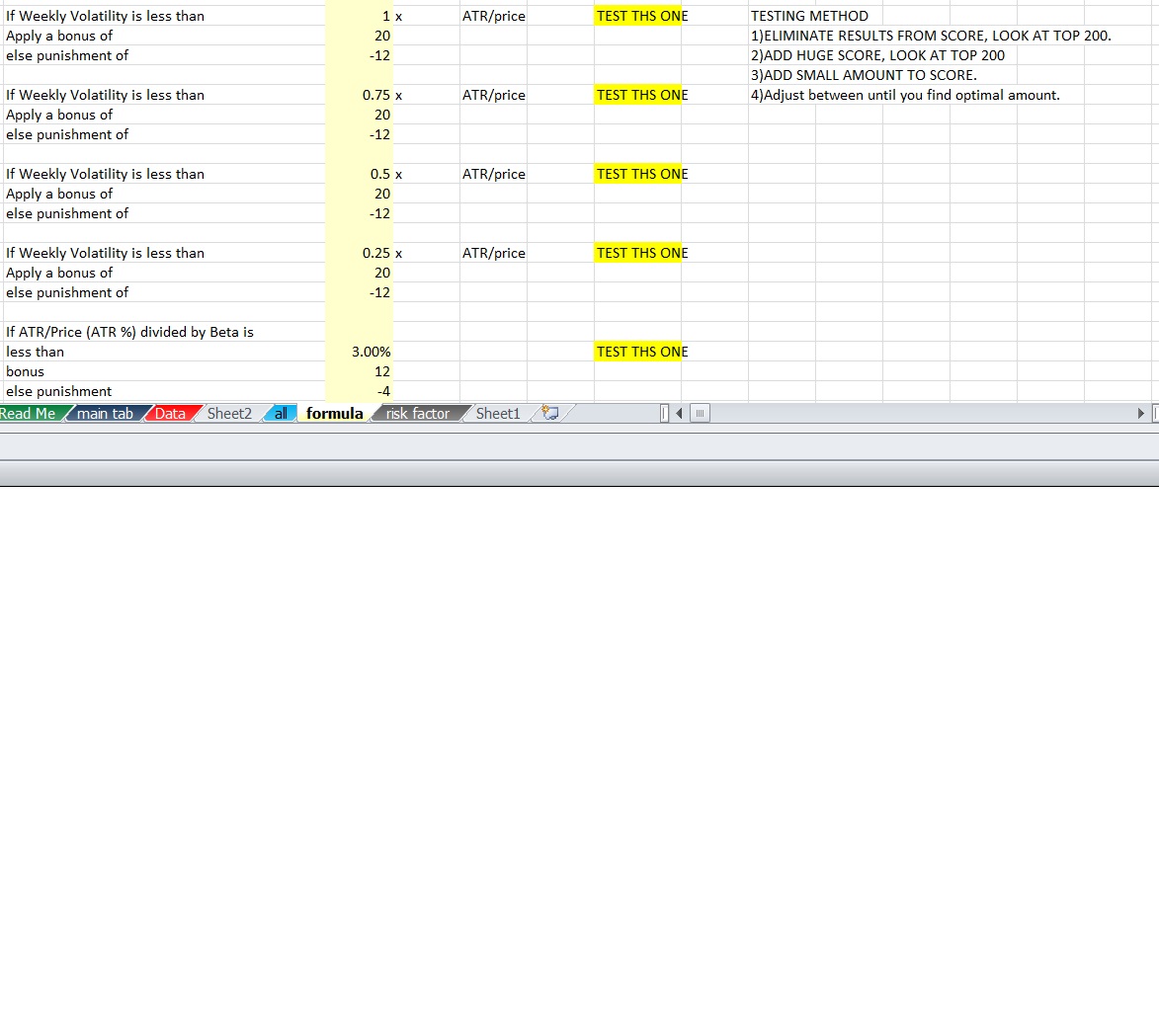

One criterion is whether a stock’s weekly volatility is less than a multiple of ATR/price (the average daily % movement). If it is, the stock is awarded points; if it fails the criterion, a punishment (negative points) is applied. I can adjust the reward, the multiplier, and the punishment to remove stocks from, or add stocks to, the top 400 that wouldn’t otherwise make it. The goal is to change the formulas dramatically and pay particularly close attention to the stocks at the top of the ranking: I see how they change if I set the number to zero so no points are awarded, and then set it to 200 or something extreme so this criterion makes up a larger percentage of the total intermediate term score. By doing this I can better see whether this is a good criterion to have at all, whether it should be removed completely, or whether it should be one of the primary indicators. Most likely the answer will be somewhere in between, and that’s what I’m after.
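The reward/punishment rule for this criterion fits in one small function. A minimal sketch, assuming weekly volatility is given in percent and ATR in dollars, so ATR is divided by price and multiplied by 100 to put both on the same percent scale; the function name and parameters are hypothetical stand-ins for the cells on my formula tab:

```python
def volatility_points(
    weekly_vol_pct: float,
    atr: float,
    price: float,
    multiplier: float,
    reward: float,
    punishment: float,
) -> float:
    """Return `reward` if weekly volatility < multiplier * ATR/price, else `punishment`.

    weekly_vol_pct is a percentage (e.g. 2.5 for 2.5%); atr and price are in
    dollars, so ATR/price is scaled by 100 to compare like with like.
    """
    atr_pct = atr / price * 100
    return reward if weekly_vol_pct < multiplier * atr_pct else punishment
```

Setting `reward=0` reproduces the “change the number to zero” test, while a large reward such as 200 makes this criterion a bigger share of the total score.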

The ATR (average true range) measures a stock’s daily movement over 14 days and averages it. In theory, if the weekly volatility is lower, the last 7 days were less volatile than the previous 7, but that may not always be the case depending on how the volatility is calculated…
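For reference, here is one way to compute ATR and a weekly range figure from daily price bars. A minimal sketch with several assumptions: ATR here is a plain 14-day average of the true range (Wilder’s original ATR uses a smoothed average instead, and I don’t know exactly which variant Finviz uses), and `weekly_range_pct` is a hypothetical stand-in for Finviz’s weekly volatility, taken as the average daily high-low range over the last 5 trading sessions as a percent of the close:

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Bar:
    high: float
    low: float
    close: float


def true_range(bar: Bar, prev_close: float) -> float:
    """Largest of today's range and the gaps from yesterday's close."""
    return max(bar.high - bar.low, abs(bar.high - prev_close), abs(bar.low - prev_close))


def simple_atr(bars: Sequence[Bar], period: int = 14) -> float:
    """Plain average of the last `period` true ranges (needs period + 1 bars)."""
    if len(bars) < period + 1:
        raise ValueError("need at least period + 1 bars")
    recent = bars[-(period + 1):]
    ranges = [true_range(cur, prev.close) for prev, cur in zip(recent, recent[1:])]
    return sum(ranges) / period


def weekly_range_pct(bars: Sequence[Bar], days: int = 5) -> float:
    """Hypothetical weekly volatility: mean (high - low) / close over `days` bars, in %."""
    recent = bars[-days:]
    return sum((b.high - b.low) / b.close * 100 for b in recent) / len(recent)
```

Comparing `weekly_range_pct(bars)` with `simple_atr(bars) / bars[-1].close * 100` gives the “weekly volatility vs ATR/price” check; because the 14-day window contains the last week, a quieter last week usually means the week before it was busier, though not always.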

That will be the first criterion I test. For now I have 4 separate scores: a stock whose weekly volatility is less than 1x ATR/price is awarded points, and stocks below .75x, .5x, and .25x ATR/price receive additional, cumulative awards.
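The four cumulative tiers can be sketched as a loop over the multipliers, where each tier independently awards its reward or applies its punishment. A minimal sketch, assuming every tier uses the same reward and punishment (for example the +200/-150 used in a later run) and that weekly volatility and ATR/price are both in percent; the names are hypothetical:

```python
TIERS = (1.0, 0.75, 0.5, 0.25)  # multiples of ATR/price, loosest to strictest


def tiered_points(
    weekly_vol_pct: float,
    atr_pct: float,
    reward: float,
    punishment: float,
    tiers: tuple[float, ...] = TIERS,
) -> float:
    """Sum reward/punishment across all tiers; quieter weeks pass more tiers."""
    total = 0.0
    for multiple in tiers:
        total += reward if weekly_vol_pct < multiple * atr_pct else punishment
    return total
```

For example, with ATR/price at 4%, a week at 1.5% passes the 1x, .75x and .5x tiers (4%, 3%, 2%) but fails the .25x tier (1%), scoring 3 × 200 - 150 = 450.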

Initially, with the scan as is, I narrowed the 400 stocks down to these 39:

http://finviz.com/screener.ashx?v=211&f=ind_stocksonly&t=ABB,ABT,ACXM,AFL,AMGN,AMZN,ARCW,AVID,BAP,BAX,BDX,BIDU,BIIB,BPMX,BPY,CHEK,CPE,CNX,CRTO,CZZ,DLPH,EGN,EGLE,ERF,FIS,FOX,HDB,HELE,HRB,HSIC,INFO,LVS,MLM,NWSA,QIWI,RDCM,TAP,TEP,TERP&r=21

The original 400 stocks:

http://finviz.com/screener.ashx?v=211&f=ind_stocksonly&t=A,ABBV,ABCB,ABEV,ABT,ACAS,ACET,ADI,ADP,AFG,AIQ,AIZ,ALL,ALV,AMAT,AMGN,AMZN,ANCX,APLE,APOL,ARCC,ARCX,ASML,AWH,AXL,AXS,AXTA,AZN,BANR,BAP,BAX,BBVA,BCH,BCR,BEAV,BGH,BHB,BHE,BHLB,BIDU,BKEP,BKU,BMO,BNCN,BP,BR,BRCD,BRKL,BSX,BUD,CASS,CBK,CBS,CBU,CEB,CFD,CHCO,CHCT,CHMI,CHTR,CHU,CIK,CIM,CLC,CM,CPE,CPF,CPL,CRH,CRL,CRTO,CS,CTF,CVBF,CZZ,DISH,DLPH,DOOR,DTLK,E,EAD,ECF,EDE,EFUT,EGLE,EGN,EQM,ERC,ERF,ESLT,ESRX,EV,EVER,EVV,EWBC,EXAR,FFIC,FFIN,FIBK,FIS,FMBI,FMS,FMX,FOX,FRC,FTS,FUN,GGN,GLO,GLQ,GMED,GMLP,GMS,GNCMA,GTWN,HAR,HCA,HD,HDB,HELE,HES,HNP,HOMB,HPT,HRB,HRC,HSIC,HWKN,IFF,IHG,INDB,INFO,INWK,IQNT,JACK,JRS,KAR,KELYA,KHC,KLAC,KZ,L,LBTYA,LEN,LFC,LIOX,LKFN,LMNR,LOCK,LPL,LRCX,LSCC,LTXB,LUX,LVLT,LVS,MBT,MBVT,MBWM,MCBC,MCK,MDLZ,MED,MEG,MENT,MFS,MHG,MHK,MLM,MMAC,MMM,MMP,MO,MTSC,MTW,NANO,NBW,NCIT,NCMI,NCOM,NEWS,NFLX,NICE,NKX,NMR,NMZ,NNC,NPO,NRO,NSA,NSC,NSR,NWBO,NWSA,NYLD-A,OKSB,OMI,ON,ONB,OPY,ORA,ORAN,ORM,PATK,PBCT,PDCO,PEGA,PHF,PHG,PINC,PLAY,PLXS,PM,PNFP,PRGS,PSX,QEP,QGEN,QIWI,QTS,RATE,RDCM,RDN,RDS-A,RDS-B,REN,RNR,RVLT,RY,SASR,SBCF,SBGI,SCI,SCNB,SEIC,SEMG,SEP,SFL,SFNC,SIVB,SIX,SNBC,SNN,SNY,SPLP,SRLP,SSB,SSL,STAA,STM,STO,STX,SYK,SYNC,SYT,T,TAP,TBI,TCB,TD,TEF,TEP,TERP,TEX,TFX,TI,TI-A,TIME,TISI,TMH,TMK,TRGP,TUBE,TV,TVPT,TWX,TXN,UAM,UBSI,UCBI,ULH,UMBF,UN,UNP,USB,USFD,VAR,VASC,VBTX,VCF,VIP,VLO,VMC,VRTU,VZ,WABC,WBK,WCN,WDC,WDFC,WGBS,WHF,WM,WOOF,WPPGY,WSBC,WST,WTFC,WU,WWAV,XEC,XL,XLNX,AAPL,ABB,ACGL,ACXM,AEG,AFH,AFL,AGO,AIMC,AJG,ALSN,AMBC,AMCX,AMD,AMED,AMN,AMTD,AMX,ANW,AOI,APC,APFH,APOG,ARCW,ASX,AVGO,AVID,AWR,AXP,BATS,BBG,BBT,BCS,BDX,BERY,BG,BHBK,BHP,BIIB,BIO,BK,BKMU,BLE,BLVD,BMY,BNS,BOH,BPFH,BPL,BPMX,BPOP,BPY,BRK-B,BRO,BRSS,BT,BUSE,BWINB,BXS,BYD,C,CABO,CATY,CB,CBB,CET,CFG,CFR,CHEK,CHFC,CHH,CHMT,CIE,CIT,CKH,CLB,CLH,CLSD,CMA,CMCO,CMRE,CNA,CNHI,CNI,CNO,CNV,CNX

The next stage will be to see if the quality and/or amount of names I pull improves.

So here is the first criterion:

For the next run, I set +200 and -150 for all 4 of these criteria. The one below involving ATR/price divided by beta is a separate metric that I will test another time.

Later I can see which is more important, whether an even or an exponential score is best, and whether scoring something like .10x or less than 2x would be more appropriate. Is it better to use this as a check that the recent week isn’t totally out of control, or to actively seek weeks that are certainly quiet, even if that eliminates some that aren’t super quiet by this criterion but are still reasonable? We’ll eventually find out. For now we want to know how powerful this SET of criteria is together, and then we can make adjustments to figure out which part matters more.
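To compare an even score with an exponential one, each tier can take its own reward from a schedule. A minimal sketch, assuming a hypothetical `growth` factor: 1.0 gives the same reward at every tier, while 2.0 doubles the reward at each stricter tier; punishments are passed per tier so they can be tested separately:

```python
def reward_schedule(base: float, n_tiers: int = 4, growth: float = 1.0) -> list[float]:
    """growth=1.0 -> even schedule; growth > 1.0 -> stricter tiers pay more."""
    return [base * growth**i for i in range(n_tiers)]


def scheduled_points(
    weekly_vol_pct: float,
    atr_pct: float,
    rewards: list[float],
    punishments: list[float],
    tiers: tuple[float, ...] = (1.0, 0.75, 0.5, 0.25),
) -> float:
    """Tiered score where each tier has its own reward and punishment."""
    total = 0.0
    for multiple, reward, punishment in zip(tiers, rewards, punishments):
        total += reward if weekly_vol_pct < multiple * atr_pct else punishment
    return total
```

`reward_schedule(200)` gives an even 200 per tier, while `reward_schedule(50, growth=2.0)` gives 50, 100, 200 and 400, so the quietest weeks dominate the score.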

So with 200/-150 as the scores, I came up with a new list of 400 and a new grouping. Maybe I should have gone with 1000/-500, because there was still an overlap of names, but that’s okay.

By doing this I actually got 59 names. I’m not sure if this is because a single signal was more dominant, because this indicator needs to be scored higher, or because there’s just more value in multiple scans. Certainly there was some overlap.

http://finviz.com/screener.ashx?v=211&f=ind_stocksonly&t=ABB,ABCD,ABCO,ABM,ABT,ADI,ADSK,AFL,AMDA,AMGN,AYA,BAX,BDX,BF0B,BIIB,BKEP,BLE,BLL,BPY,BSFT,BSAC,CBMG,CHEK,CLSD,CMTL,CPE,CRAY,CZZ,EGLE,FOX,GIB,GNK,GSL,HDB,HELE,HRB,HSIC,KMB,LVS,MANT,MPEL,NEWR,PFE,PII,QIWI,Q,QTNA,RDCM,ROKA,SJM,SSTK,TAP,TDG,TERP,THLD,TEP,TMO,VWR,WPT,WYNN&r=21
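Producing “a new list of 400” is a top-N cut on the total score, and the overlap between two runs is a set intersection. A minimal sketch, assuming the total scores are already computed into a dictionary keyed by ticker; the helper names are hypothetical:

```python
import heapq


def top_n(scores: dict[str, float], n: int = 400) -> list[str]:
    """Tickers with the n highest total scores, best first."""
    return heapq.nlargest(n, scores, key=lambda ticker: scores[ticker])


def overlap(run_a: list[str], run_b: list[str]) -> set[str]:
    """Tickers that made the cut in both runs."""
    return set(run_a) & set(run_b)
```

A large overlap between the old and new top 400 suggests the reward/punishment change was too small to reshape the ranking.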

I think what I will do is remove the tickers the two lists have in common and compare the ones that differ. After doing this, I find that not only does the 200/-150 score produce more names, but I like them better. That means the best solution is probably closer to 200/-150 than to 20/-12. We don’t know whether the exact solution should be higher than 200/-150 or more like 115/-85. I also see advantages to running multiple screens. Nevertheless, for now 200/-150 stays, and we will test something like 400/-300 and 100/-75 to see whether either of those improves the results in my opinion. If the results are not significantly better, I’ll probably just pick one and stop there. I may also circle back to this after I improve other variables, so for now I’m just trying to get a quick approximation of where the better results lie.

Later I can test punishing stocks more than I reward them, or only rewarding with no punishment for stocks that fail. I can also test scoring each stricter tier lower or higher than the previous one (for example, if weekly volatility is also less than .25x ATR/price, do I score 1000/-500 or something like 20/-5?).
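Because each screener link lists its tickers in the `t=` parameter, the common/different split can be done straight from two URLs. A minimal sketch using only Python’s standard `urllib.parse`; it assumes the tickers are always comma-separated in a single `t` parameter, as in the links above, and the helper names are hypothetical:

```python
from urllib.parse import parse_qs, urlparse


def tickers_from_url(url: str) -> set[str]:
    """Read the comma-separated `t=` list from a finviz screener URL."""
    query = parse_qs(urlparse(url).query)
    raw = query.get("t", [""])[0]
    return {t for t in raw.split(",") if t}


def compare_runs(old_url: str, new_url: str) -> dict[str, set[str]]:
    """Split two runs into shared tickers and tickers unique to each run."""
    old, new = tickers_from_url(old_url), tickers_from_url(new_url)
    return {"common": old & new, "only_old": old - new, "only_new": new - old}
```

Passing the 39-name link as `old_url` and the 59-name link as `new_url` leaves `only_old` and `only_new` as the names worth judging side by side.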

As you can see, there’s a lot to test, so I’m taking a pragmatic, split-testing approach and trying to move on quickly to other things to keep improving this. I wasn’t nearly this efficient the first time I made the score, and making changes was more difficult, so I should be able to improve this tool rather quickly by running a similar process.

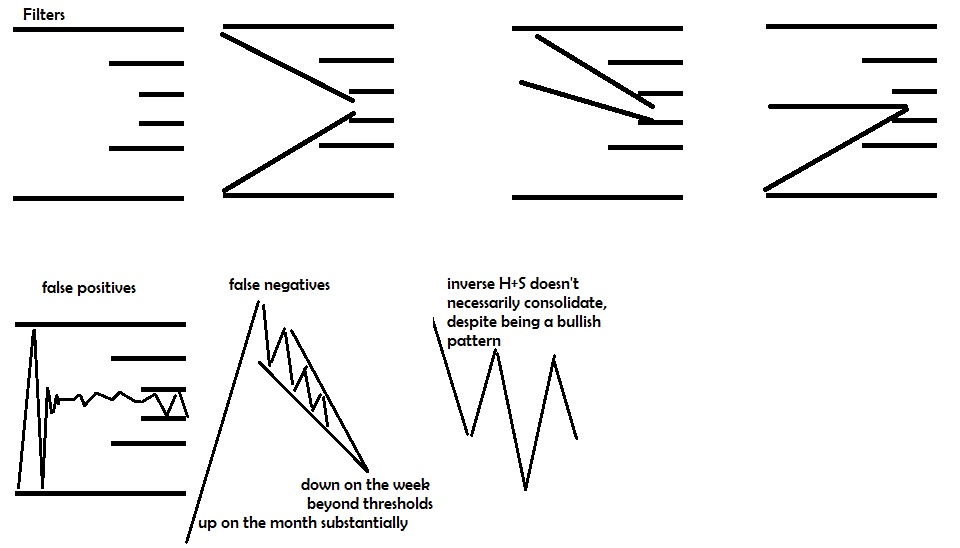

For now the short term consolidation score doesn’t need any changes; it serves its purpose of making sure the stock isn’t breaking out or down on a daily basis, without any false positives (although some false negatives that are still good to trade may get filtered out). But I want to keep improving different variables in the intermediate term score and then move on to the long term consolidation rank and seek improvements there as well.
